NVIDIA Corporation (NASDAQ:NVDA)

For nearly three decades, NVIDIA has been a pioneer in technological advancement. The company has made major advances in its two main areas of focus, graphics processing units (GPUs) and artificial intelligence (AI), and has created products that have transformed the way we use computers.

Piper Sandler is growing increasingly confident in Nvidia’s proprietary suite of artificial intelligence software tools.

On Wednesday, Piper Sandler analyst Harsh Kumar reiterated his Overweight rating on Nvidia stock (NASDAQ:NVDA) and his $300 price target.

“We feel that [Nvidia’s] business will continue its acceleration as the year rolls on due to the existing tailwinds related to AI and data-center expansion,” he wrote. “Throughout the entirety of our conversations, we continue to find that the key differentiator for Nvidia is the CUDA platform and how it integrates with the surrounding NVDA infrastructure.”

CUDA, Nvidia’s parallel computing platform and programming model, runs only on chips made by Nvidia.

Kumar added that, according to the firm’s checks, the entire business appears to be picking up steam and continued to improve throughout the first fiscal quarter.

Nvidia shares were flat in Wednesday trading despite a broader market decline.

Nvidia’s chips are well suited to generative artificial intelligence, which has surged in popularity this year. These systems learn to generate content by ingesting vast amounts of text, photos, and videos, a “brute force” approach that demands enormous computing power. OpenAI’s release of ChatGPT late in 2022 spurred a resurgence of interest in this type of AI.

According to Kumar, Nvidia has a significant advantage over potential competitors because developers have invested considerable time and resources learning to use the company’s tools, language, and software. Having committed so heavily to Nvidia’s ecosystem, coders find it difficult to switch to other chips.

“The primary business goal for Nvidia is to build an accelerated computing system that is superior to those of our competitors,” he wrote. Because CUDA is “exclusive to Nvidia hardware,” the company keeps a competitive advantage among developers.

Wall Street analysts are generally bullish on Nvidia. According to FactSet, more than 70 percent of analysts covering the stock rate it Buy or the equivalent.

Over the past few years, NVIDIA has partnered on AI projects with several industry leaders, including Amazon, Microsoft, and IBM. These partnerships have helped NVIDIA expand its reach in the AI sector and strengthen its position as a leading provider of AI technology.

One of NVIDIA’s most important and fruitful collaborations is with Amazon Web Services (AWS). In 2018, AWS announced Amazon Elastic Inference, a service that lets customers attach GPU-powered inference acceleration to any Amazon Elastic Compute Cloud (EC2) instance. The service uses NVIDIA TensorRT software to optimize deep learning models for inference on NVIDIA GPUs, giving customers a high-performance, cost-effective way to deploy AI applications.

Beyond these partnerships, NVIDIA has launched a number of new products that have further strengthened its position in AI. One of them is the NVIDIA A100 GPU, announced in May 2020, which is up to 20 times more powerful than its predecessor, the Volta V100.

The A100 was designed specifically for large-scale AI workloads, such as those typical of cloud computing and data center environments. Its third-generation Tensor Cores offer up to six times the performance of the previous generation. The A100 also supports Multi-Instance GPU (MIG) technology, which lets users partition a single GPU into as many as seven separate instances, each with its own dedicated resources.

The Near Future of AI and NVIDIA’s Role in It

Looking ahead, AI will clearly continue to play a major role in shaping the direction of technology. As AI applications become more widespread, demand for NVIDIA’s products is likely to keep rising.

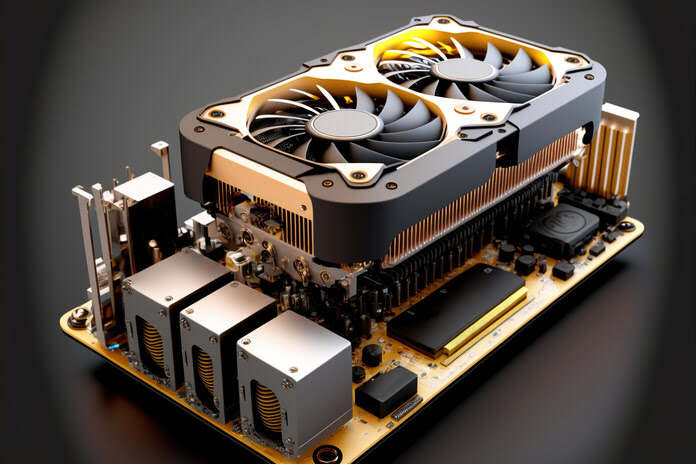

Featured Image: Freepik @ Rawf8.com